Mitigating Algorithmic Bias

Team Bye Bye Bias

Diva Agarwal, Yang Cheng, Miley Hu, Jeremy Wang

My role: Project Manager and Research Lead

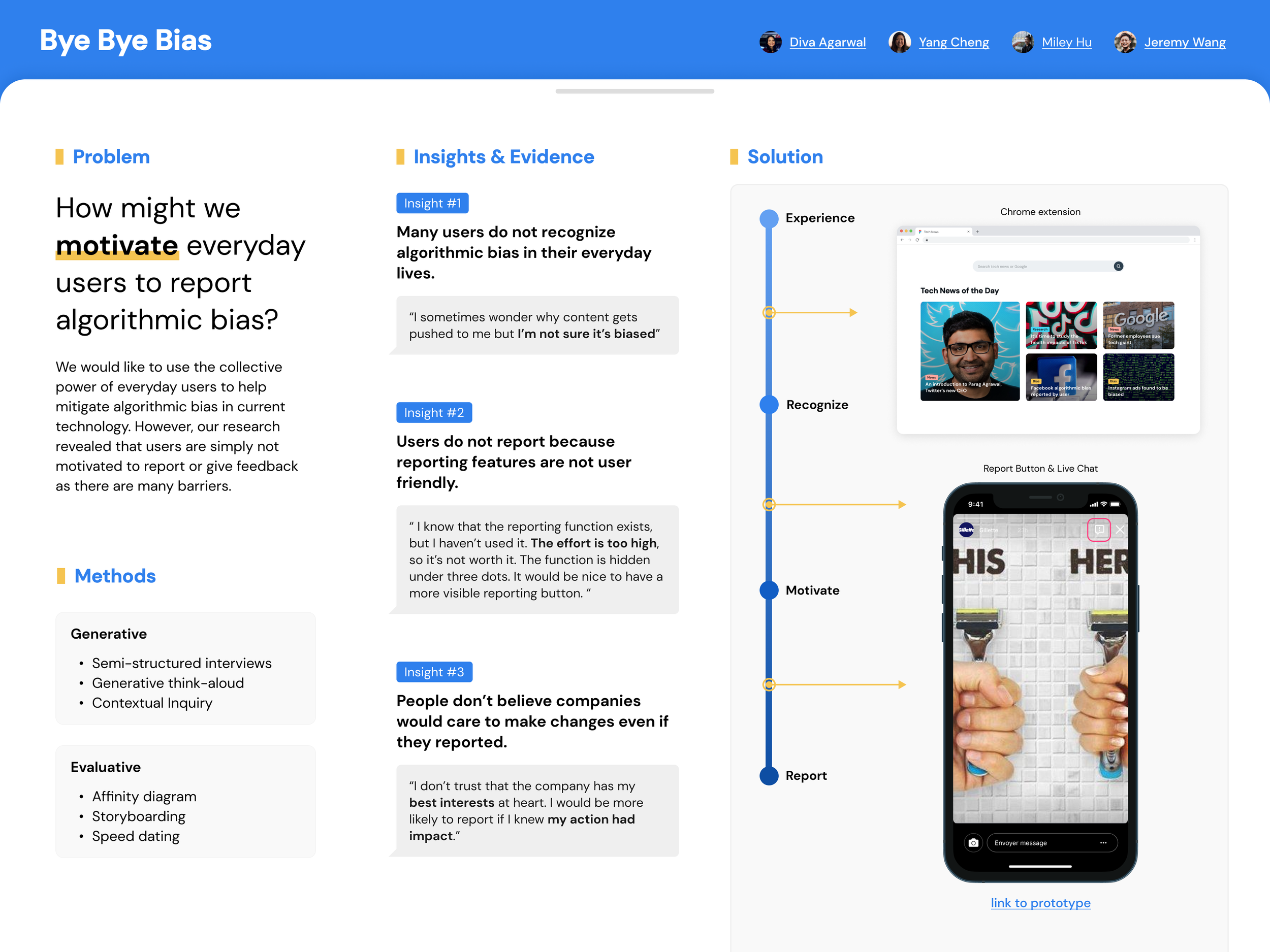

How might we motivate everyday users to report algorithmic bias?

Everyday users have collective auditing power that can help minimize bias in AI systems, but are they likely to report the algorithmic bias they encounter? Our findings suggest not. We found that users are not motivated to report such issues because several barriers stand in the way. Our solution focuses on reducing these barriers.

Research Methods

We used think-aloud sessions for generative research, contextual inquiries, and speed dating to understand the barriers users currently face in recognizing and reporting algorithmic bias. These methods also helped us identify motivators that encourage people to report bias.

“I sometimes wonder why content gets pushed to me and I’m not sure if it’s biased.”

Insight & Problem #1:

Many users do not recognize algorithmic bias in their everyday lives.

Solution:

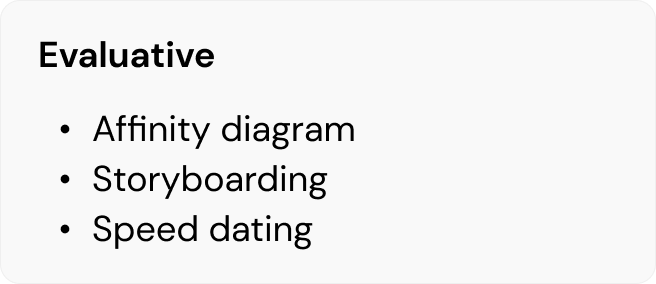

To address this lack of awareness, we propose a browser extension that “intermixes” educational content about algorithmic bias with tech news. This design implicitly persuades users to learn about incidents of algorithmic bias, making them more likely to identify similar incidents in the future.

“I know that the reporting function exists, but I haven’t used it. The effort is too high, so it’s not worth it. The function is hidden under three dots. It would be nice to have a more visible reporting button.”

Insight & Problem #2:

Users do not report bias because reporting features are not user-friendly.

Solution:

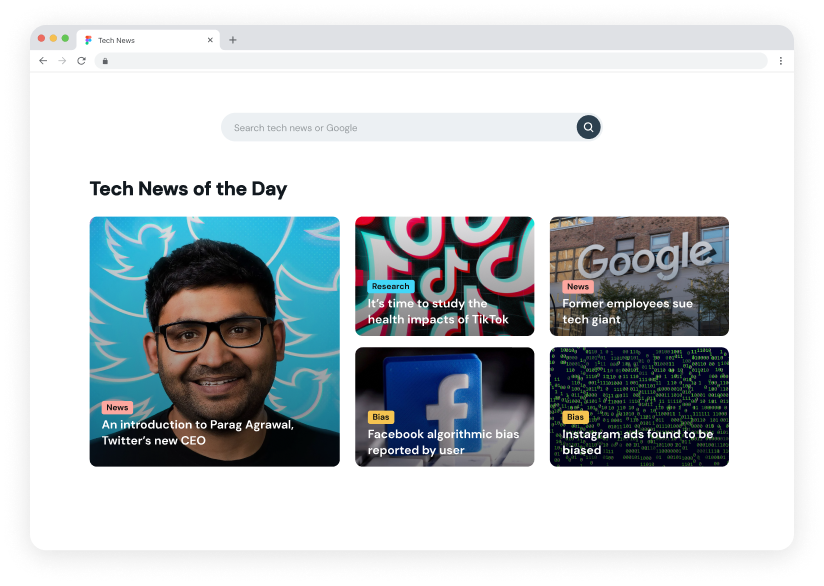

One simple change we propose is to place the report button at the top level of the interface, where it is easily accessible. This visual reminder keeps reporting at the top of users’ minds and encourages more action.

“I don’t trust that the company has my best interests at heart. I would be more likely to report if I knew my action had impact.”

Insight & Problem #3:

People don’t believe companies would care to make changes even if they reported bias.

Solution:

Through our proposed Instagram live chat feature, users connect directly with a company representative. The representative helps them understand why the bias happened and updates them on the steps being taken to mitigate it. The user can then feel good about the impact their report made in mitigating algorithmic bias.

Conclusion

Our solutions aim to contribute to a better technological future with less algorithmic bias. Everyday users should feel more motivated to report because they will be able to identify more cases of algorithmic bias, report them more easily, and feel more confident about the impact they are making.